TL;DR The most challenging parts of developing TRAUMA for iOS were a fast blur with a big radius and realtime streaming of video into textures with synchronized audio.

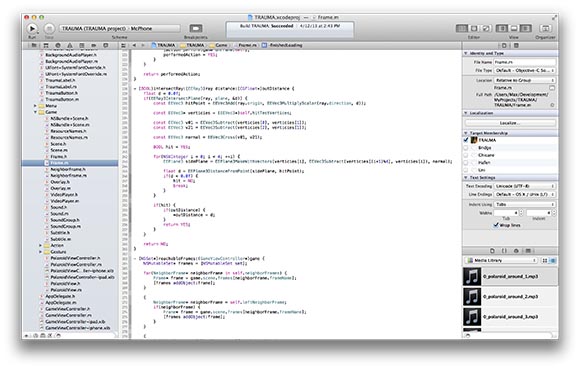

A sample of the source code of TRAUMA for iOS. This particular function detects which image was selected by the player.

The original TRAUMA was created entirely by Krystian and uses Flash as its base technology. Even though there are ways to compile Flash directly into an iOS app, that did not seem to be the way to go for a game like ours. TRAUMA seems simple, but it is based on some really performance-intensive effects. It is a 3D game with a lot of blurring and video textures.

We wanted to create an iPad version, so I started from scratch. Later, when we saw that gesture painting worked well on smaller screens, we decided to support iPhones and iPod touches too.

In the end the game is based on a C core for math and Objective-C for all the device specific components and the menu. The result is a portable core without losing the look and feel of a native iOS application.

You might ask why we didn’t use an engine. The popular cocos2d is obviously a 2D engine. Unity has no support for video textures, and I’m also not a big fan of that engine. The Unreal UDK was not available at that time. I also didn’t want to use a multi-platform engine because I would lose control over the user interface, which should consist of standard iOS elements.

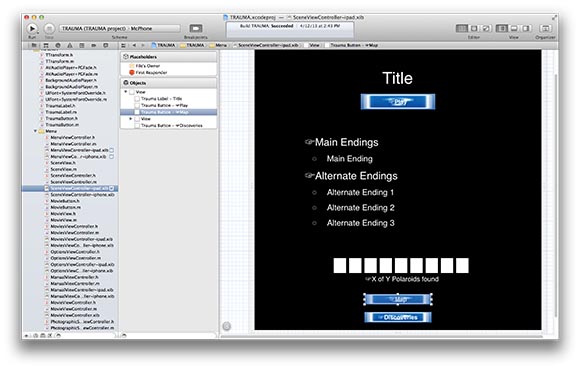

Redesigning the menu

The original menu was too fiddly for the iPad. I started before the first Retina iPad, and drawing all the small text didn’t work out. Still, I wanted to keep the four separate scenes and the direct access to the options and the movie gallery.

Krystian is very picky when it comes to user interfaces, and it took multiple iterations before the current sliding menu was finished. Now the only drawback compared to the original is that you cannot view all discoveries at once.

“Krystian is very picky when it comes to user interfaces…”

A nice interactive menu is an overlooked component of many games. The menu is one of the first things you see in a game, and it should fit in by having the same art style and using the same input methods. It also shouldn’t break with the expected behavior of the platform. There is no good menu that is the same on a touch-based and a cursor-based interface.

Soon after the release of TRAUMA people started to ask for an iOS version. The game seems to be perfectly made for touch, but it was not. In the original version you use the mouse cursor to find frames, click on them to go there, and hold down the mouse button to paint a gesture. But there is no hovering on a touch screen. Either you have your finger on the device or there is no input at all.

In my first implementation I used a button to switch between search and paint mode, but it felt totally awkward. I also played around with using a second finger to switch between the input modes. Then I came up with the idea of tap and hold to switch. You started by searching for frames and could enter gesture paint mode when you briefly paused your motion. This was similar to the original version.

But the first implementation that convinced Krystian was when we started with gesture mode and used hold to switch to search mode. This also radically changed the gameplay. On iOS you are using far more gestures to navigate between frames because you are starting with gesture painting. It is also much more likely that you discover the move left, right and back gestures on your own.
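The hold-to-switch behavior described above can be pictured as a small state machine. The following is only a minimal sketch, not TRAUMA's actual code: the type `TouchTracker`, the functions `touch_began`, `touch_update` and `touch_ended`, and the thresholds `HOLD_SECONDS` and `HOLD_SLOP` are all hypothetical names and values chosen for illustration.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed thresholds; the values used in the game are not known. */
#define HOLD_SECONDS 0.4     /* how long the finger must rest */
#define HOLD_SLOP    10.0f   /* how far it may drift while resting, in points */

typedef enum { MODE_PAINT, MODE_SEARCH } InputMode;

typedef struct {
    InputMode mode;
    bool      touching;
    float     anchor_x, anchor_y;  /* where the finger last came to rest */
    double    anchor_time;         /* when it got there, in seconds */
} TouchTracker;

/* Every touch starts in gesture paint mode. */
void touch_began(TouchTracker *t, float x, float y, double now) {
    t->mode = MODE_PAINT;
    t->touching = true;
    t->anchor_x = x;
    t->anchor_y = y;
    t->anchor_time = now;
}

/* Call once per frame while the finger is down, with its current position. */
void touch_update(TouchTracker *t, float x, float y, double now) {
    assert(t->touching);
    if (t->mode == MODE_SEARCH) return;   /* stay in search until the finger lifts */

    float dx = x - t->anchor_x;
    float dy = y - t->anchor_y;
    if (dx * dx + dy * dy > HOLD_SLOP * HOLD_SLOP) {
        /* The finger is still moving: restart the hold timer from here. */
        t->anchor_x = x;
        t->anchor_y = y;
        t->anchor_time = now;
    } else if (now - t->anchor_time >= HOLD_SECONDS) {
        /* The finger rested long enough: switch to searching for frames. */
        t->mode = MODE_SEARCH;
    }
}

/* Lifting the finger resets the tracker to paint mode. */
void touch_ended(TouchTracker *t) {
    t->touching = false;
    t->mode = MODE_PAINT;
}
```

In an Objective-C app these functions would be driven from the touch callbacks of a view plus a per-frame update, since a resting finger produces no move events on its own.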

Fading out the gesture paint and hold to flash frames were implemented thanks to our fine beta testers. Many had problems entering the search mode. Before that there was a lot of random tapping to find neighbor frames.

Writing a gesture recognizer

When you start with gesture detection you might think it is some dark voodoo magic. But it turns out to be pretty straightforward in the end. A good entry point is “Easily Write Custom Gesture Recognizers”. Even though the final algorithm used in TRAUMA is slightly different, you will get the idea.

Detecting gestures is one of the crucial tasks in TRAUMA, so I spent a lot of time optimizing the gesture recognizer until it had a pretty high level of tolerance. Nothing is more frustrating than painting a gesture and then having it go unrecognized. On the other hand, I tried to ensure that random painting is not accidentally treated as a gesture.
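To make the trade-off between tolerance and false positives concrete, here is a deliberately simple recognizer sketch. It is not the algorithm used in TRAUMA (which is not published here): it encodes a stroke as a sequence of dominant directions and compares that sequence against templates. The names `Point`, `stroke_directions` and `recognize`, the 20-point minimum step, and the template strings are all assumptions made for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct { float x, y; } Point;

/* Encodes a stroke as a string of dominant directions ('L', 'R', 'U', 'D').
   Movements shorter than min_step are ignored, which gives tolerance against
   jitter. Repeated directions are collapsed into one letter.
   The y axis is assumed to grow downward, as in UIKit. */
size_t stroke_directions(const Point *pts, size_t n, float min_step,
                         char *out, size_t cap) {
    assert(pts != NULL && out != NULL && cap > 0);
    size_t len = 0;
    if (n > 0) {
        Point anchor = pts[0];
        for (size_t i = 1; i < n && len + 1 < cap; i++) {
            float dx = pts[i].x - anchor.x;
            float dy = pts[i].y - anchor.y;
            if (dx * dx + dy * dy < min_step * min_step) continue;
            float ax = dx < 0 ? -dx : dx;
            float ay = dy < 0 ? -dy : dy;
            char dir = ax >= ay ? (dx > 0 ? 'R' : 'L') : (dy > 0 ? 'D' : 'U');
            if (len == 0 || out[len - 1] != dir) out[len++] = dir;
            anchor = pts[i];
        }
    }
    out[len] = '\0';
    return len;
}

/* Returns the index of the matching template, or -1 for "not a gesture". */
int recognize(const Point *pts, size_t n,
              const char *const *templates, size_t template_count) {
    char code[32];
    stroke_directions(pts, n, 20.0f, code, sizeof code);
    for (size_t i = 0; i < template_count; i++) {
        if (strcmp(code, templates[i]) == 0) return (int)i;
    }
    return -1;
}
```

Raising the minimum step makes the recognizer more forgiving of shaky strokes, while requiring an exact match of the direction sequence keeps random scribbles from being accepted. A production recognizer would usually score similarity instead of demanding an exact match.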

Finding the balance here is pretty hard and I know that TRAUMA sometimes fails to detect an obviously correct gesture. I still try to improve this and we hopefully will ship an update later.

I have written a UIGestureRecognizer subclass that can be used in any iOS application. When I’m happy with the results and have cleaned up the code I will push it to GitHub.

“Detecting gestures is one of the crucial tasks in TRAUMA.”

Minimal loading times

I wanted the iOS version to have no loading times at all. Everyone hates loading times and so I tried to keep them as low as possible.

Since each frame in TRAUMA is unique and iOS devices only have a small amount of allocatable memory compared to a desktop, it is impossible to keep a whole scene in memory at once.

Therefore I have written a texture streaming engine. When you enter a frame all neighbor frames will be loaded. When a certain amount of textures is loaded the ones that are more than two steps away from the current frame are unloaded. Also when memory is low on the device all frames except the current one and its neighbors will be discarded.
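The loading and unloading rules above can be sketched as follows. This is an illustration under assumptions, not the engine's real code: frames are modeled as nodes in a neighbor graph, a `loaded` flag stands in for an actual GPU texture, and the names `Scene`, `Frame`, `scene_enter_frame`, `scene_memory_warning` and the budget `max_loaded` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_FRAMES    64
#define MAX_NEIGHBORS 8

typedef struct {
    int  neighbors[MAX_NEIGHBORS];
    int  neighbor_count;
    bool loaded;              /* stands in for "texture is resident" */
} Frame;

typedef struct {
    Frame frames[MAX_FRAMES];
    int   frame_count;
    int   max_loaded;         /* assumed budget that triggers unloading */
} Scene;

static int loaded_count(const Scene *s) {
    int n = 0;
    for (int i = 0; i < s->frame_count; i++) n += s->frames[i].loaded ? 1 : 0;
    return n;
}

/* Breadth-first search: dist[i] is the number of steps from start to
   frame i, or -1 if frame i cannot be reached. */
static void frame_distances(const Scene *s, int start, int dist[MAX_FRAMES]) {
    int queue[MAX_FRAMES], head = 0, tail = 0;
    for (int i = 0; i < s->frame_count; i++) dist[i] = -1;
    dist[start] = 0;
    queue[tail++] = start;
    while (head < tail) {
        int f = queue[head++];
        for (int k = 0; k < s->frames[f].neighbor_count; k++) {
            int n = s->frames[f].neighbors[k];
            if (dist[n] < 0) { dist[n] = dist[f] + 1; queue[tail++] = n; }
        }
    }
}

/* Unloads every frame that is more than keep_steps away from current. */
static void unload_beyond(Scene *s, int current, int keep_steps) {
    int dist[MAX_FRAMES];
    frame_distances(s, current, dist);
    for (int i = 0; i < s->frame_count; i++) {
        if (dist[i] < 0 || dist[i] > keep_steps) s->frames[i].loaded = false;
    }
}

/* Entering a frame loads all of its neighbors. Once the budget is
   exceeded, frames more than two steps away are unloaded. */
void scene_enter_frame(Scene *s, int current) {
    assert(current >= 0 && current < s->frame_count);
    s->frames[current].loaded = true;
    for (int k = 0; k < s->frames[current].neighbor_count; k++) {
        s->frames[s->frames[current].neighbors[k]].loaded = true;
    }
    if (loaded_count(s) > s->max_loaded) unload_beyond(s, current, 2);
}

/* On a low-memory warning only the current frame and its neighbors stay. */
void scene_memory_warning(Scene *s, int current) {
    unload_beyond(s, current, 1);
}
```

A real implementation would load textures asynchronously so that entering a frame never blocks rendering; the sketch only shows which frames are kept and which are dropped.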

The same is true for audio assets. Only the focus and gesture sounds are preloaded at start. All others are streamed. This is also true for the videos.

Even the two seconds of blank screen when starting a scene is a delay Krystian wanted to have, so you are not instantly dropped into the game.

There are only a few games on iOS that use the benefits of dynamic texture loading. This is kind of sad. Loading times can make a huge difference when it comes to the gaming experience.

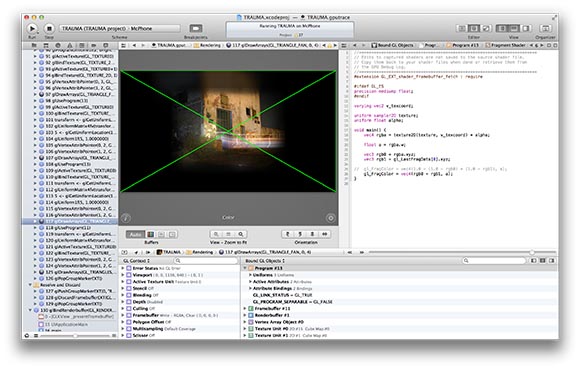

High performance, big radius blur

When you hold down your finger for a short moment, you can search for frames by sliding over the screen. When you hit a frame it will be focused. Technically it changes from a blur with a big radius to a blur with a very small radius. Blur is a computationally intensive task.

TRAUMA is using an optimized two-pass gaussian blur with a constant sized filter kernel. Also the frame is rendered into the scene before the blur is performed. Projecting your image before blurring it is an additional pass but the result is much better for images that are not parallel to the screen plane.
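As a CPU reference for the two-pass idea, the sketch below blurs a grayscale image horizontally and then vertically with a fixed 9-tap kernel. Several things here are assumptions rather than facts about TRAUMA's shader: the kernel uses binomial weights (which approximate a Gaussian), the blur radius is changed by spacing the taps further apart via a hypothetical `step` parameter, and the function names are invented for this example.

```c
#include <assert.h>
#include <stdlib.h>

#define TAPS 9               /* constant kernel size: center plus 4 taps per side */
#define HALF (TAPS / 2)

/* Binomial weights (row 8 of Pascal's triangle divided by 256).
   They sum to 1 and approximate a Gaussian. */
static const float KERNEL[TAPS] = {
    1 / 256.0f,  8 / 256.0f, 28 / 256.0f, 56 / 256.0f, 70 / 256.0f,
    56 / 256.0f, 28 / 256.0f, 8 / 256.0f,  1 / 256.0f
};

static int clampi(int v, int lo, int hi) {
    return v < lo ? lo : (v > hi ? hi : v);
}

/* One 1D pass. (dx, dy) is the offset between neighboring taps, so
   (step, 0) blurs horizontally and (0, step) blurs vertically.
   Samples outside the image are clamped to the nearest edge pixel. */
static void blur_pass(const float *src, float *dst, int w, int h,
                      int dx, int dy) {
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            float acc = 0.0f;
            for (int i = 0; i < TAPS; i++) {
                int sx = clampi(x + (i - HALF) * dx, 0, w - 1);
                int sy = clampi(y + (i - HALF) * dy, 0, h - 1);
                acc += KERNEL[i] * src[sy * w + sx];
            }
            dst[y * w + x] = acc;
        }
    }
}

/* Blurs image in place. A larger step widens the blur without adding taps.
   Returns 0 on success, -1 if the temporary buffer cannot be allocated. */
int blur_two_pass(float *image, int w, int h, int step) {
    assert(image != NULL && w > 0 && h > 0 && step >= 1);
    float *tmp = malloc((size_t)w * (size_t)h * sizeof *tmp);
    if (tmp == NULL) return -1;
    blur_pass(image, tmp, w, h, step, 0);   /* horizontal pass */
    blur_pass(tmp, image, w, h, 0, step);   /* vertical pass */
    free(tmp);
    return 0;
}
```

Two 9-tap passes cost 18 samples per pixel instead of the 81 a full 9x9 kernel would need, which is the main reason separable blurs are popular. Widely spaced taps skip pixels, so a real shader would likely combine this with downsampling or bilinear filtering to avoid visible artifacts.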

I spent a lot of time optimizing the blur algorithm and its parameters. As a result, even if all neighbor frames are flashing there is no drop in the frame rate.

I will write a full article about high performance blurring with shaders in my blog soon.

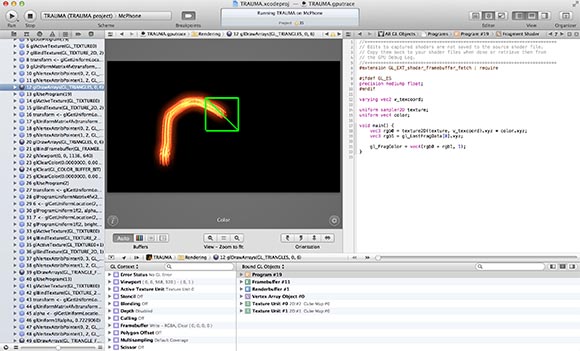

Realtime video texture streaming

Another big challenge on iOS was streaming video into textures. When I started developing with iOS 5, the API was not capable of grabbing realtime frames from a video. I used AVAssetReaderOutput in the beginning, but it had some major issues when you needed to drop a frame. Thanks to the fine folks at Apple, iOS 6 finally offered AVPlayerItemVideoOutput, exactly what we were looking for.

This class delivers the image you need right now. It supports frame dropping and also takes care of audio and video synchronization.
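What "the image you need right now" with frame dropping means can be illustrated without any Apple API. The sketch below is purely conceptual and does not show how the system class works internally: given the presentation timestamps of the decoded frames and the current playback clock, it picks the newest frame that is already due and reports how many frames were skipped. The function `frame_for_time` and its parameters are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* pts:     presentation timestamps in seconds, sorted ascending
   shown:   index of the frame currently on screen, or -1 before the first
   now:     current playback time, for example taken from the audio clock
   dropped: receives the number of frames skipped without being shown
   Returns the index of the frame to display at time now. The result equals
   shown if no newer frame is due yet. */
long frame_for_time(const double *pts, size_t count, long shown,
                    double now, size_t *dropped) {
    assert(pts != NULL && dropped != NULL && shown >= -1);
    long next = shown;
    while ((size_t)(next + 1) < count && pts[next + 1] <= now) {
        next++;
    }
    *dropped = (next > shown + 1) ? (size_t)(next - shown - 1) : 0;
    return next;
}
```

Driving the lookup from the audio clock is what keeps picture and sound in sync: when rendering falls behind, late frames are skipped instead of being shown too late.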

Sadly you get some serious hangs of up to 3 seconds if you use two instances of this class at the same time. Therefore, when you leave a video frame in TRAUMA, the playback is stopped instantly and the next video texture is loaded. You might notice this in the first scene when you toggle between the frames with the stacked spheres. But this is only a small drawback.

Summary

After all, the biggest challenge was to understand the existing XML files with a left-handed coordinate system, a ton of exceptions, and strange attributes like uptothisone="true", while all documentation existed only in Krystian’s head, and sometimes not even there.

Still, we had a lot of fun porting TRAUMA, even if we needed to fight over some details. We also improved some minor parts: the photo previews are now unique images instead of empty frames, and you can explore the location in Apple Maps when you have found all photos in a scene.

I spent a lot of my spare time making this game possible. I like technical challenges as much as making games. I have learned a lot, and if you want more detailed information about the implementation just drop me a line on ADN or Twitter.

You can play TRAUMA on iOS here